welcome to cathode ray dude's blog. please be aware that i'm a lot cruder on here than on youtube.

doing nonsense on the computer

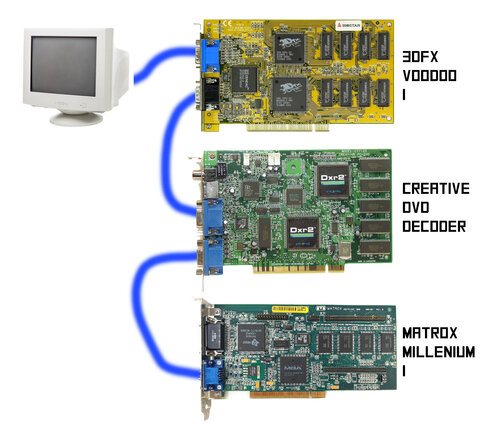

okay here's what we have going on

as you know, the original 3dfx voodoo cards (and i think only those? i think they fixed this by the 2?) could not deliver 2D graphics at all, so you had to hook up your normal 2D card to a passthrough port, and during non-gaming operations the voodoo would simply pass the video straight through to your monitor. when you started a glide game, it would cut off the signal from your 2D card and inject its own.

“then how did windowed mode work” as far as i know and recall, it didn't. go fullscreen or go home

this was not the only class of device that did this, but i'm not aware of many (in fact I might own all… two) and the most common were DVD decoders, like this Creative Dxr2 that shipped in a bunch of Dells, IIRC.

you plug this in like the voodoo - the video from your normal gfx card goes into it, then your monitor plugs into the second port. when you play an MPEG, MPEG2, or DVD with the included app, the bytes are sent to the card, it decodes them, and then it superimposes them on the screen using chroma keying

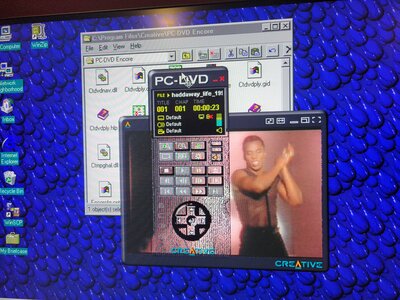

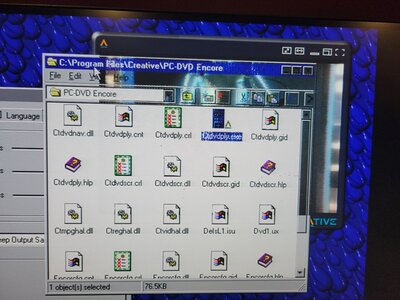

the bundled video player app just displays a bright blue rectangle where the video should be, then tells the card where that rectangle is. the card scales the video to that size and position, generates a VGA signal at the same resolution as your desktop, and then uses the color component of the passthrough signal as a key to blend between them. it literally just bluescreens the DVD over your desktop, it rules. oh! it also sucks a lot
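here's a rough sketch of that keying decision, done per pixel in software instead of in analog hardware. the key color, the tolerance, and the function names are all made up for illustration; i have no idea what thresholds the Dxr2 actually uses.

```python
# toy model of chroma keying: each pixel is an (r, g, b) tuple, 0-255.
# KEY_COLOR and TOLERANCE are invented values, not anything from the Dxr2.
KEY_COLOR = (0, 0, 255)
TOLERANCE = 60


def is_key(pixel, key=KEY_COLOR, tolerance=TOLERANCE):
    """True if every channel of pixel is within `tolerance` of the key color."""
    return all(abs(p - k) <= tolerance for p, k in zip(pixel, key))


def composite(desktop_row, video_row):
    """Use the video pixel wherever the desktop pixel matches the key color."""
    return [v if is_key(d) else d for d, v in zip(desktop_row, video_row)]


# one scanline: grey window, pure key blue, slightly-off blue, white
desktop = [(192, 192, 192), (0, 0, 255), (10, 20, 250), (255, 255, 255)]
video = [(1, 1, 1), (2, 2, 2), (3, 3, 3), (4, 4, 4)]
result = composite(desktop, video)
```

with these numbers the two blue-ish pixels get replaced by video and the grey and white ones stay desktop. loosen the tolerance far enough (say `is_key((192, 192, 192), tolerance=200)`) and plain grey starts counting as "blue", which is one way a keyer could end up punching through things it shouldn't.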

as you can see, out of the box it just straight up doesn't really work. haddaway punches straight through windows of fairly neutral colors, tbh, and produces these awful shimmering artifacts in the process. it has a control panel, and if you fiddle with the knobs enough you can get it to be a lot better, but it still tends to shine through in places it shouldn't. this is no “hardware overlay,” let me tell you.

that said, you know how much CPU load there is when playing this 720×480, 30fps MPEG2? 2%, even when fullscreen.

you wanna know how much load playing a 320×240, 24fps MPEG1 puts on this machine? 20%, and if you double the window size, it goes to 70%.

this PC, you see, is a Pentium 133. go just one generation older and you wouldn't even be able to play full motion video without some kind of hardware accelerator, so yeah, even if the keying is kinda rough at times, having the ability to play smooth, full-motion video directly from a DVD, scaled to any resolution up to 1280×1024, with zero CPU impact, was hugely important if you didn't yet have the cash for the Stupidly, Comically Expensive Pentium II.
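for a sense of scale, here's the raw pixel throughput of the two clips mentioned above. this is just arithmetic on the resolutions and frame rates; it says nothing about actual decode cost per pixel, which differs between MPEG1 and MPEG2, and the function name is mine.

```python
def pixels_per_second(width, height, fps):
    """Raw number of pixels a decoder has to produce each second."""
    return width * height * fps


dvd = pixels_per_second(720, 480, 30)    # the MPEG2 clip the card handles
mpeg1 = pixels_per_second(320, 240, 24)  # the MPEG1 clip the CPU handles
ratio = dvd / mpeg1

# doubling the window in both dimensions means 4x the pixels to scale and blit
doubled_window_factor = (640 * 480) / (320 * 240)

print(dvd, mpeg1, ratio, doubled_window_factor)
```

so the clip that costs 2% CPU through the card is pushing more than five times as many pixels per second as the one that costs 20% in software.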

anyway, for a long time i didn't know these existed; i hadn't ever looked into DVD decoders, never having needed one, so i assumed they just kind of… decoded the video and spit it back at the software to be blitted into RAM? turns out no, it was a 50/50 split between this passthrough approach and devices that used the VESA Feature connector to spit the frames directly into VRAM - but that's a story for another time.

once i discovered that this was a common technique, i immediately wanted to chain it with a Voodoo card, and since i owned one, this seemed like a slam-dunk for a little weekend project! i then let about 16 months go by without doing it, until tonight. now i've done it.

and i have no doubt that i'm nowhere close to the first person to do it, because this combination would have made a ton of sense in 97 or 98. i'm sure plenty of people had this exact setup, and of course the actual effect of this arrangement is quite unremarkable. it uh… it works. you can't tell that anything unusual is going on. or, at least, it works now.

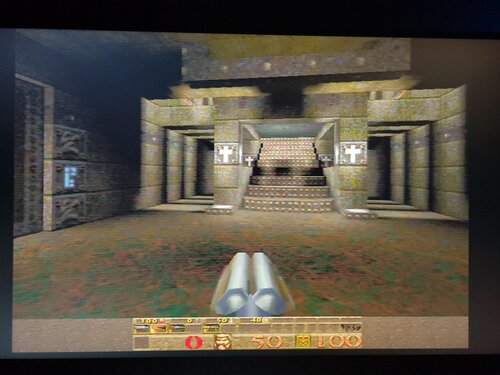

at first it had a problem: while videos played fine and the video from my 2D graphics card looked good, if i fired up glquake, the picture became incredibly dim! like, unplayably dim. and as you may know, adjusting your gamma in the early days of 3d acceleration was often simply impossible, for no reason i ever found out - the slider in the game did nothing, as usual, and since this machine is running NT4, with very rudimentary drivers, there is no 3dfx control panel.

but when i bypassed the DVD decoder and plugged my monitor straight into the Voodoo, it looked fine! well, so, at the time i had the chain set up like this:

Matrox 2D card > Voodoo > DVD decoder

now obviously this satisfied my basic, delightfully devilish intent to have the windows desktop go through two layers of passthroughs before making it to the monitor, and i was pleased (if not particularly surprised) to find that there's no apparent visual degradation, despite going through effectively 18 feet of cable and two passthrough interfaces. however, it did mean that the voodoo's signal was being processed by the DVD decoder, and i figured that was probably the culprit. i swapped the chain around like this:

Matrox 2D card > DVD decoder > Voodoo

and this solved the problem. Quake is now quite playable.

this outcome doesn't surprise me, and here's why: most likely, the Voodoo is passing through the signal using a very simple solid state analog switch, basically just a handful of transistors that gate off one signal and let through another when you pull an input high. those are basically relays, they don't much care what's going through them, within certain tolerances of voltage/current/frequency. when the Voodoo is off, you are getting exactly the same signal that your 2D card is outputting, minus some small amount of voltage drop.

the DVD decoder, on the other hand, is performing some amount of Processing in the analog domain in order to splice two fullscreen images together. there are a few ways to do this, and one would be to use the exact same component: a simple solid state analog switch, gated by the chroma keyer at high speed as the beam races across the screen, sending through either the desktop image or the decoded DVD as appropriate for each pixel. in this case, it wouldn't generally matter what the underlying signal from the graphics card was, but i suspect it's not that simple.

in order for the Dxr2's picture to mesh well with your desktop, it needs to know what the gamut of your card is, what its black levels are, et cetera, so that when it displays “white”, it looks the same as your desktop's “white.” does this differ with VGA cards? i know TV signals vary, but maybe in VGA it's rigidly standardized, white is always 0.7V or w/e and black is always 0.0V or whatever, and no such range adjustment is needed, i don't know.

but if that isn't the case, then it opens the door for disagreement between the devices. would anyone be surprised to learn that the voodoo's RAMDAC is weird? i certainly wouldn't. maybe it uses a weird black level? maybe it has a limited output voltage range? i should look up the VGA spec and see if this is possible, and also put a scope on the output of the two cards and see if there's an obvious difference. i'll do that.. later, sometime, probably.

surely there must be some differences though, because the creative card has an autocalibration process it goes through when you set it up, where it flashes a bunch of gibberish on the screen using the 2D card and (presumably) measures it to figure out the particulars of that specific device. but this only runs once, so if there is a difference between the output of the Matrox and 3DFX cards, it wouldn't know that. it simply assumes that it's connected to the 2D card, so when the voodoo engages, the Dxr2 doesn't know anything has happened and doesn't try to recalibrate - not that it matters, since there'd be no way for it to display the calibration cards without Glide support.

of course, this still raises the question: why is the Dxr2 having a global effect on the image? if it were just using an analog switch on the fly as i proposed, then it would have no control over any part of the image except the area where the key color appears. here, the keying shouldn't even be active, since the DVD player software isn't running, and even if it were, it would have to interpret the entire output of the voodoo as a 50% key signal. makes no sense, that can't be it. but what is going on? all i can do is guess.

maybe the Dxr2 uses an analog mixer chip instead of a simple switch, and the mixer has significant intrinsic loss, which it has to compensate for by passing the output signal through an amp to get it back up to original levels, but maybe that amp isn't linear? the calibration process would therefore exist in order to find out how hot your card's output is, in order to get it back where it started without going too bright/dark. idk, that doesn't feel right.

ah, what about this: maybe VGA doesn't have rigid white and black levels, and in order to bring the signals from the decoder and GPU into the same voltage gamut, instead of adjusting its own signal intensity, the Dxr2 adjusts your card's output up or down to match its own signal. in this scenario, my matrox millennium has a really hot signal compared to the Creative card, so it attenuates it by 50% to match. then when we switch to the voodoo, it continues attenuating by the same amount, resulting in a much dimmer image. eh? eh? sounds good, if only there's any truth to it.
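to put toy numbers on that guess: a sketch where the decoder works out one gain during calibration and then applies it to whatever comes in. every level here is invented, in arbitrary relative units, chosen only so the gain comes out to the 50% from the paragraph above. nothing is measured, and i don't know that the Dxr2 works this way at all.

```python
# all levels are invented, in arbitrary relative units; nothing here is measured
DECODER_WHITE = 1.0  # assumed white level of the decoder's own video
MATROX_WHITE = 2.0   # assumed "hot" 2D card, picked to give a 50% gain
VOODOO_WHITE = 1.0   # assumed voodoo white level, same as the decoder's


def calibrate(source_white, target_white=DECODER_WHITE):
    """Gain that brings the calibrated source's white down to the target."""
    return target_white / source_white


def passthrough(level, gain):
    """Apply the stored gain to whatever is on the input, no questions asked."""
    return level * gain


gain = calibrate(MATROX_WHITE)                  # calibration runs once, on the 2D card
matrox_out = passthrough(MATROX_WHITE, gain)    # lands on the decoder's white
voodoo_out = passthrough(VOODOO_WHITE, gain)    # same gain, half as bright

print(gain, matrox_out, voodoo_out)
```

in this model the matrox white comes out matched to the decoder, while the voodoo's white comes out at half level because the gain was never recomputed for it. that would look like the dim glquake picture, but it's only a model of the hypothesis, not evidence for it.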

perhaps i will go looking for a technical document to try to confirm this. godspeed, me

Discussion

This is 100% correct. But even in 1996 you'd still expect most of your videogames to be running in DOS mode, though Windows games were definitely available (notably, several Sega ports that used techniques that are very much not forward compatible with current Windows), so you wouldn't mind. DirectX existed but most graphics cards' APIs were not terribly compatible early on.

The first is definitely true, and I suspect the second is true as well but I wouldn't have means of testing it. The voodoo is set to *only* ever run in 16-bit color mode (similar to the PS1 in that respect) and kinda squashes the color space of the rendered image to compensate for it, which is one of the reasons why gamma tweaking on those cards is so crucial. I am not terribly surprised to find that it causes issues with the DVD decoder card, though I've never been in a situation where I had to manage a setup like this.

it was both voodoo and voodoo 2 cards that did that passthrough thing, if i recall right? voodoo banshee was the first one that didn't, i think. taking this from memory, so take it with a grain of salt.

yeah idk why i didn't look it up lol. just did and yeah, the 2 still did passthrough. the rush and banshee were voodoos 1 and 2, respectively, with 2D hardware (of varying quality) added, and then with the voodoo 3 they merged it all together